Every conversation about AI eventually circles back to the same haunting question:

Can machines really think?

The question isn’t new. Alan Turing posed it in 1950, and three decades later philosopher John Searle reignited the debate with his “Chinese Room” thought experiment. In between, early chatbots like ELIZA led people to feel they were talking to something more than a clever script. Today, with large language models powering customer service, search engines, and even creative tools, the debate feels sharper than ever.

Alan Turing and the Birth of the Question

Alan Turing, best known for his role in breaking the German Enigma cipher, turned his mind to an equally difficult puzzle in his 1950 paper “Computing Machinery and Intelligence.”

He reframed the question “Can machines think?” as a practical test, which he called the imitation game: if a human judge cannot reliably tell a machine’s answers from a person’s, should we call it intelligent?

This idea, now known as the Turing Test, was less about defining intelligence in absolute terms and more about shifting the focus to behavior. Instead of debating metaphysics, Turing asked us to look at interaction.

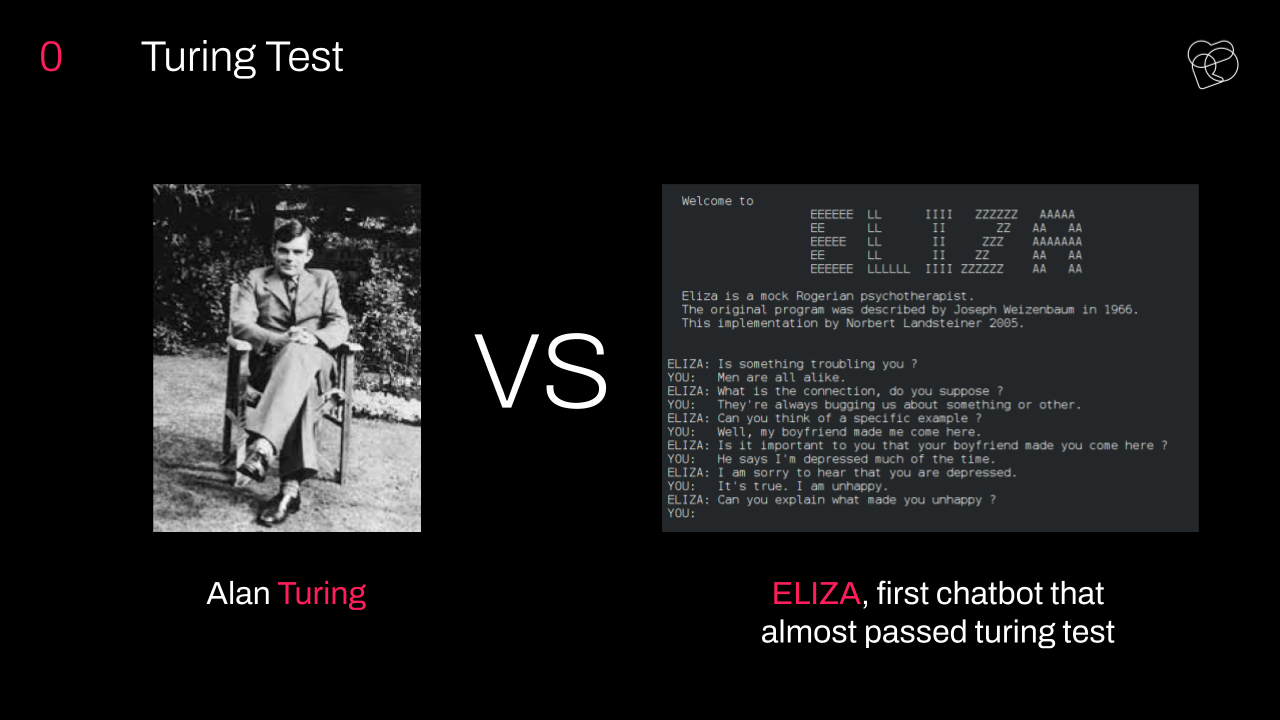

The First Chatbot: ELIZA

Jump to the mid-1960s, when Joseph Weizenbaum created ELIZA at MIT. Its best-known script mimicked a psychotherapist by turning users’ words back on them with simple pattern-matching rules. Type in “I’m feeling sad,” and ELIZA might reply, “Why are you feeling sad?”
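To see how little machinery this kind of reflection needs, here is a minimal Python sketch. It is an illustration only, not Weizenbaum's original program: the patterns, the reply templates, and the `respond` function are all assumptions made up for this example.

```python
import re

# Hypothetical rules for illustration; ELIZA's real scripts were richer.
# Each rule pairs a pattern with a reply template that reuses the captured words.
RULES = [
    (re.compile(r"\bi(?:'m| am) feeling (.+)", re.IGNORECASE), "Why are you feeling {0}?"),
    (re.compile(r"\bi(?:'m| am) (.+)", re.IGNORECASE), "How long have you been {0}?"),
]

# Used when no rule matches, so the "conversation" never stalls.
FALLBACK = "Please go on."


def respond(text: str) -> str:
    """Return a canned reflection of the user's words; no meaning is modeled."""
    # Normalize curly apostrophes and drop surrounding whitespace and end punctuation.
    cleaned = text.replace("\u2019", "'").strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(match.group(1))
    return FALLBACK


if __name__ == "__main__":
    print(respond("I'm feeling sad"))
    print(respond("The weather is nice."))
```

The first rule that matches wins, so the more specific "feeling" pattern is listed before the general one. Nothing here represents what sadness is; the program only rearranges the user's own words.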

What surprised even Weizenbaum was how quickly users felt understood. Some poured out personal stories to ELIZA, despite knowing it was just a program. This revealed something profound: imitation can be persuasive, even without genuine intelligence behind it.

The Chinese Room Argument

But not everyone bought into behavior as proof of thought. In 1980, philosopher John Searle proposed the Chinese Room. Imagine a person who doesn’t know Chinese locked in a room with a rulebook. Notes in Chinese come in; the person follows the rules to produce replies that look fluent. To outsiders, it seems like the room “understands” Chinese. But inside, no one actually knows the language.

Searle argued this is what computers do: manipulate symbols without true understanding. Passing the Turing Test doesn’t mean the machine thinks – it only means it imitates.
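A toy Python version of the room makes the point concrete. This is a deliberately crude sketch: the `RULEBOOK` entries, the `DEFAULT_REPLY`, and the `room` function are hypothetical, and a real rulebook in Searle's sense would be vastly larger.

```python
# Hypothetical rulebook: incoming symbol strings mapped to outgoing ones.
# The English glosses are for the reader only; the program never uses them.
RULEBOOK = {
    "你好吗？": "我很好，谢谢。",        # "How are you?" -> "I'm fine, thanks."
    "你叫什么名字？": "我没有名字。",    # "What is your name?" -> "I have no name."
}

# Reply used when a note is not in the rulebook ("Please say that again.").
DEFAULT_REPLY = "请再说一遍。"


def room(note: str) -> str:
    """Look the note up and hand back the listed reply; nothing is interpreted."""
    return RULEBOOK.get(note, DEFAULT_REPLY)


if __name__ == "__main__":
    print(room("你好吗？"))
```

From outside, the replies look fluent. Inside, there is only a lookup, which is the gap between symbol manipulation and understanding that Searle had in mind.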

Strong AI vs. Weak AI

Here lies the divide introduced by John Searle.

- Weak AI: Machines simulate intelligence to complete tasks (like chatbots or translation software).

- Strong AI: Machines would have genuine minds, with understanding and consciousness.

Most of what we call “AI” today is firmly in the weak category. ChatGPT, Claude, Gemini – they’re statistical pattern-matchers, not conscious entities. They can generate fluent text, but they don’t “know” in the human sense.

Why Imitation Still Matters

Critics often dismiss imitation as shallow, but imitation has power. ELIZA showed it decades ago. Modern chatbots show it at scale. If a virtual agent resolves your issue faster than a call center, do you care if it “understands”?

For businesses, the question isn’t whether AI has a mind, but whether it can solve problems reliably, safely, and at scale. The philosophical debates enrich our understanding, but the commercial stakes lie elsewhere: usability, trust, and performance.

This brings us to another dimension of the debate: scale. If machines keep getting faster and bigger, does that bring them closer to true thought?

Brains, Computers, and Scaling Power

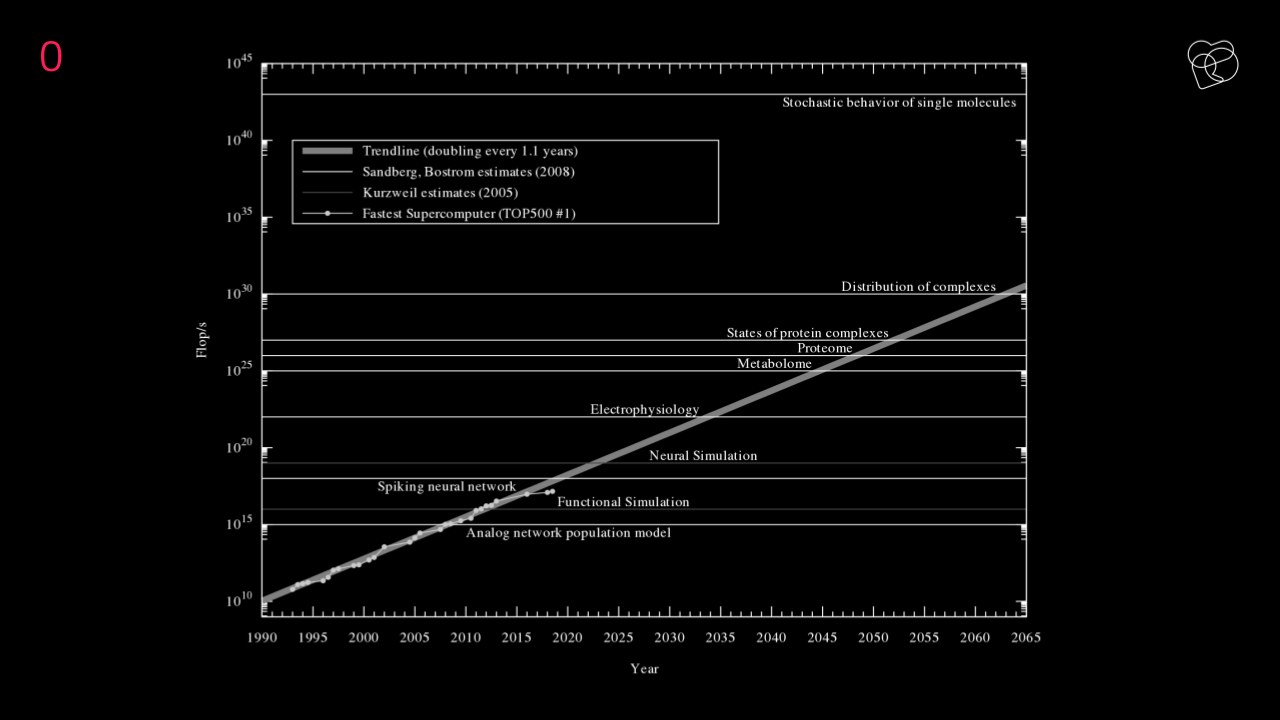

Modern AI often dazzles us with sheer scale: billions of parameters and trillions of operations per second. But does more raw computing power equal greater intelligence? History suggests otherwise, even though the growth itself is real. Moore’s Law, and futurists like Ray Kurzweil, have highlighted exponential growth in processing power, and supercomputer performance, measured in FLOPS, has doubled again and again, reaching scales once thought to belong only to biological brains.

Yet intelligence isn’t just FLOPS. The image above illustrates this tension: projections of computational power rising against the backdrop of biological complexity. A spiking neural network or an electrophysiology experiment doesn’t map cleanly onto human reasoning, and even surpassing the brain’s neuron count doesn’t guarantee adaptability or creativity. This gap grounds today’s debates: machines may imitate thinking, but can they ever replicate it in essence?

The Architecture of Intelligence: Turing vs. von Neumann

If Alan Turing gave us the philosophical foundation of AI with the Turing Test, John von Neumann gave us its technical backbone. Turing asked whether machines could “think,” reframing the puzzle into computability: what can be calculated? Von Neumann, meanwhile, provided the architecture that almost every modern computer still uses – memory, processing, input, output – a structure that made Turing’s theories actionable.

The images below capture this duality. In his book “The Computer and the Brain”, von Neumann argued that unlike computers, the human brain doesn’t rely on arithmetic precision. That insight is striking today, when machine learning models burn through staggering amounts of precise calculation to approximate what biological systems achieve through flexibility.

Are we, then, brute-forcing intelligence in ways evolution never did? The juxtaposition of Turing’s abstraction and von Neumann’s machinery forces us to consider whether AI’s future lies in scaling current methods or rethinking them altogether.

Wrap-Up

So, can AI really think? If by “think” we mean subjective experience, then no. If we mean problem-solving and communication, then yes – and increasingly well. Turing, Searle, and Weizenbaum all left us with ways to frame the debate. Today, the challenge is finding the balance: acknowledging the limits of imitation, while recognizing its enormous practical value.

AI might not think like us, but it forces us to rethink what thinking really means.