Why Intelligence Is So Hard to Define

Intelligence feels like an easy word until you try to pin it down. Is it about brain size, number of neurons, problem-solving, or awareness of the self? Different disciplines give different answers. Psychologists study reasoning and memory. Neuroscientists point to networks of neurons. Evolutionary biologists highlight adaptability in the wild.

And now, with artificial intelligence, computer scientists add yet another angle: benchmark scores, training data, and model parameters. At S-PRO, we work with AI daily, and we often encounter this very question from clients – what does it mean for a system to be “intelligent”? It isn’t an abstract puzzle. The answer shapes how AI products are designed, tested, and deployed.

Philosophers, neuroscientists, and AI researchers have all tried to pin down what we mean by intelligence. Richard Dawkins once described it as the ability to adapt to new situations, learn from experience, and apply abstract concepts to interact with the environment. It’s less about having a big brain and more about being flexible in thought and action.

Nick Bostrom, a leading thinker on AI, divides intelligence into three levels: human, artificial, and the yet-to-be-seen “superhuman” intelligence that might one day surpass our own. This ladder reminds us that definitions matter. When we talk about “AI,” we’re already stretching the word to cover systems that calculate, predict, and sometimes even surprise us – but they still don’t think like we do.

Fluid vs. Crystallized Intelligence

Psychologist Raymond Cattell introduced a useful distinction between fluid and crystallized intelligence. Fluid intelligence is the ability to solve new problems in real time, spot patterns, and adapt – like figuring out a puzzle or navigating an unfamiliar city. Crystallized intelligence, by contrast, is built on accumulated knowledge: the facts, vocabulary, skills, and learned routines we pick up over time.

Humans use both. A child figuring out a novel puzzle shows fluid intelligence, while recalling history lessons in school draws on crystallized knowledge.

Animals demonstrate both in surprising ways. A raven bending a wire to access food is fluid intelligence in action. A dog following dozens of trained commands relies on crystallized knowledge. Machines, however, remain heavily weighted toward crystallized intelligence. They reproduce patterns from training data with extraordinary accuracy but often struggle with true adaptability.

In business terms, this distinction is critical. Databases and large language models can store and recall enormous amounts of information, which is crystallized intelligence at scale. But fluid intelligence – the ability to adapt in new, uncertain contexts – remains harder for AI. That is why your phone can give you every capital city on Earth but still struggles with a tricky riddle.
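To make the contrast concrete, here is a minimal Python sketch of a purely “crystallized” system. It is an illustration only, not how any real assistant or language model works: the `CAPITALS` table and the `crystallized_answer` function are hypothetical names invented for this example. The system recalls stored facts perfectly but has nothing to fall back on when a question falls outside what it has stored.

```python
from typing import Optional

# Hypothetical stored knowledge: the "crystallized" part of the system.
CAPITALS = {
    "France": "Paris",
    "Japan": "Tokyo",
    "Kenya": "Nairobi",
}

QUESTION_PREFIX = "What is the capital of "


def crystallized_answer(question: str) -> Optional[str]:
    """Return a stored answer if the question fits the known pattern, else None."""
    if question.startswith(QUESTION_PREFIX) and question.endswith("?"):
        # Extract the country name between the prefix and the question mark.
        country = question[len(QUESTION_PREFIX):-1]
        return CAPITALS.get(country)
    # Anything outside the stored pattern cannot be answered by recall alone.
    return None


if __name__ == "__main__":
    print(crystallized_answer("What is the capital of Japan?"))
    print(crystallized_answer("What has keys but can't open locks?"))
```

The first question is answered from memory, while the riddle returns nothing, because solving it would require reasoning about a situation the system has never stored.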

When we design AI solutions, we help clients clarify whether they need crystallized systems (like knowledge retrieval or document automation) or fluid capabilities (like adaptive decision support). Treating them as the same leads to mismatched expectations and failed projects.

Does Neuron Count Equal Intelligence?

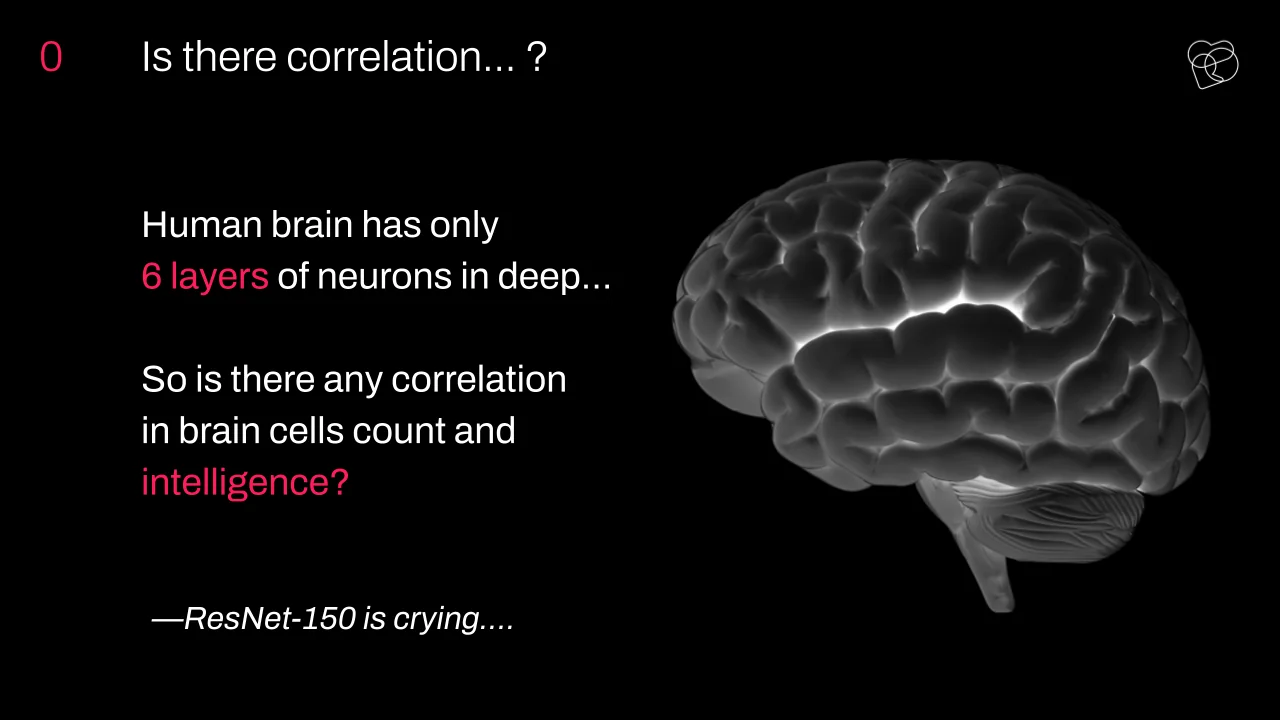

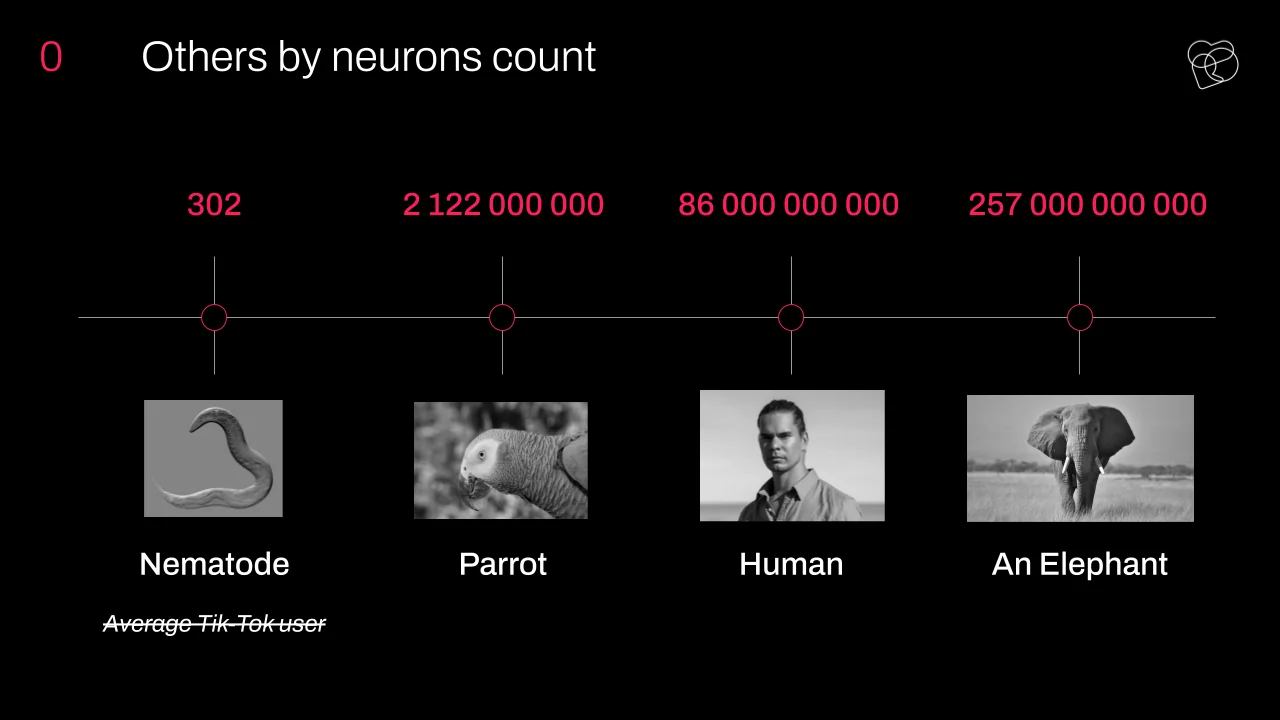

It’s tempting to assume more neurons equal more intelligence. After all, the human brain has around 86 billion neurons, and its neocortex is organised into six layers. But biology complicates this neat story.

Take Aplysia depilans, a sea slug with just 20,000 neurons. Despite its modest count, it can learn, escape predators, and seek food. Its nervous system is simple enough that scientists have mapped many of its circuits in detail, making it a model for studying memory.

Or consider parrots. With about two billion neurons, far fewer than humans or elephants, parrots can mimic speech, solve problems, and even invent tools. Meanwhile, elephants boast a staggering 257 billion neurons – yet we don’t see them writing symphonies or building skyscrapers. Clearly, neuron count is not the whole story.

This matters for AI as well. Modern models now have billions of artificial “neurons.” Yet scale alone doesn’t make them adaptable, creative, or conscious. For companies building with AI, the lesson is clear: bigger models are not automatically smarter ones. Architecture, training data, and application context matter just as much as size.

Consciousness vs. Intelligence

Even with these categories, another big divide emerges: intelligence is not the same as consciousness.

Intelligence is about solving problems. Consciousness is about subjective experience – the feeling of what it’s like to be. Where does one begin and the other end?

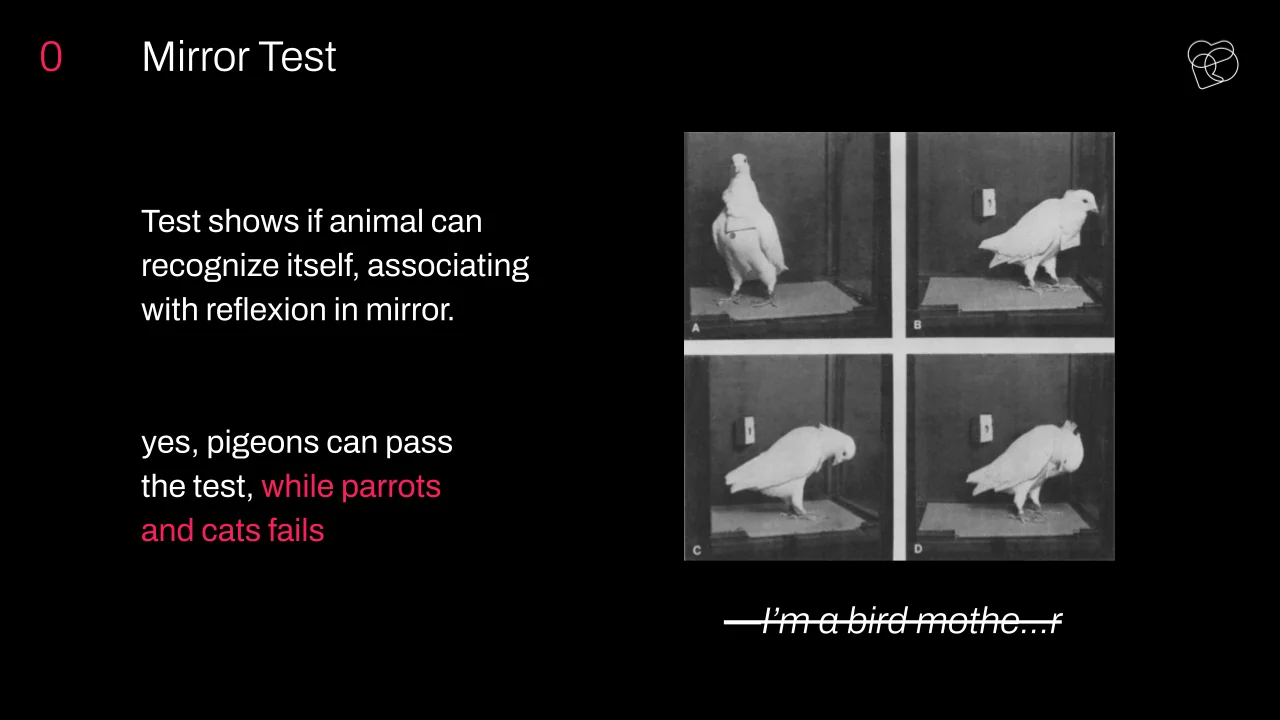

Scientists have tried to probe this with the Mirror Test, which checks whether an animal can recognize itself in a reflection. Humans usually pass it at around 18 months. Dolphins, elephants, and even magpies sometimes pass the test. Cats, pigeons, and parrots, on the other hand, usually fail. Does this make cats less intelligent? Or does it show that self-recognition is just one narrow way of measuring awareness? Passing doesn’t prove consciousness, but it shows some level of self-recognition.

Philosopher David Chalmers calls this the Hard Problem of Consciousness: explaining why physical processes in the brain give rise to subjective experience. Why does processing light make us see color, rather than just react to wavelengths? Machines today can simulate intelligence but lack this inner perspective.

One way to approach this is by listing the properties of consciousness. These include:

- The ability to perceive the environment through the feelings, experiences, and desires of a physical body;

- The ability to reflect and become self-aware on the basis of new experience and knowledge;

- The ability to continuously adapt and develop, discovering new qualities under the pressure of circumstances and conflicting desires;

- A continuous internal conflict that forces us to adapt and pushes us to grow.

For organisations exploring AI, this distinction matters. When someone asks whether AI “thinks” or “understands,” the right answer is: it processes, predicts, and recommends – nothing more.

Why This Matters for AI and How Machines Fit in the Spectrum

At S-PRO, we often meet clients who ask: “When will AI become conscious?” But this question misses the point. The systems we design don’t need consciousness to create value. What they need is intelligence – the ability to learn patterns, solve problems, and adapt in useful ways.

Machine intelligence occupies its own niche in this debate. It is engineered for efficiency and optimisation. Machines can match or outperform humans in narrow domains like chess, translation, or pattern recognition, and their artificial “neuron” counts now reach staggering billions. But they still struggle with fluid adaptability and have no consciousness.

That’s why understanding these distinctions isn’t just academic. It guides technical decisions. When we design AI for industries like finance or healthcare, we’re not chasing neuron counts or speculating about machine souls. We’re building systems that operate within clear definitions of intelligence, while acknowledging the philosophical gap of consciousness.

For us as a software development company, the lesson is practical. When building AI solutions, the right question isn’t “can AI match human intelligence?” but “what kind of intelligence does this organisation need to scale?” Seen this way, intelligence is less a hierarchy and more a spectrum – and the smartest move is knowing which part of that spectrum to harness.