Our team at S-PRO has made the TravelPlanBooker experience even more seamless and productive with an AI-based assistant designed to build a detailed personalized trip itinerary from just one user query. The tool recommends popular verified destinations, calculates efficient routes, and plots a detailed travel schedule with various attractions to visit each day, leaving only one thing that the user needs to do: click “Book” and enjoy an unforgettable trip.

Advanced travel planning tool

- Geography: Switzerland

- Industry: Tech, Travel

- Services: Internal operations optimization

Brief

Our client analyzed the main competitors, looked at their shortcomings, and decided they wanted to create a system that would plan a complete trip and only require the user to click “Book”. A user would be able to buy airline tickets, rent a car, book accommodation, and so on after describing their expectations for the trip (where to go, with whom, what locations to see, and so on).

We successfully implemented this feature. Now, the platform has a dedicated AI chatbot page where a user enters trip dates, a destination, and the number of people and gets a trip designed around those preferences.

Revolutionized Travel Planning with AI

- Introduced an AI-based travel planning feature that transformed the user experience.

- Enabled users to effortlessly create personalized itineraries with just one query.

Comprehensive Trip Management

- Developed a comprehensive system covering travel planning, route optimization, ticket booking, and accommodation booking.

- Streamlined the entire trip planning and booking process into a single platform.

Overcoming Technological Challenges

- Successfully addressed challenges related to ChatGPT response speed by optimizing workflows and introducing a caching layer.

- Demonstrated the ability to optimize and enhance the platform’s performance in the face of complex technical obstacles.

User-Friendly Interface and Real-Time Adjustments

- Implemented a user-friendly chatbot interface allowing users to describe their trips and make real-time adjustments to proposed plans.

- Elevated the user experience by providing an interactive and dynamic platform.

Competitive Edge and Vision Realization

- Responded to client needs by creating a system that plans complete trips with a single click, addressing competitors’ shortcomings.

- Successfully realized the client’s vision of an AI-driven platform that simplifies and enhances the entire travel planning and booking process.

Features

The AI-based travel planning feature generates a draft trip itinerary with a list of locations and a description of what the user can do or see at each one. For example: “Traveling to London for three days, you’ll visit the British Museum, the National Gallery, and the Victoria and Albert Museum. You will visit good places to eat such as Scully St. James’s, Ikoyi Restaurant, and Mr. White’s restaurant. And you will go on a tour of Buckingham Palace.” The user can adjust the proposed plan in a chat-like window. The experience is similar to the GPT playground, with every response from the AI-based travel assistant being a high-level travel plan.

When we started working on this AI-based feature, which combines travel planning, route optimization, and ticket and accommodation booking, we realized that the locations offered to the user had to be both relevant to their request and logistically sound. Travelers need to get from one place to the next conveniently, and the proposed route has to follow a logical sequence. Therefore, in addition to ChatGPT requests and integrations with the client’s APIs, our team wrote an algorithmic module that optimizes the route generated by ChatGPT.
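To illustrate what such a route optimization step can look like, here is a minimal sketch in Python. It is not the module our team built: it assumes each stop is a name with approximate latitude and longitude, keeps the first stop as the starting point, orders the rest with a nearest-neighbor pass, and then applies a simple 2-opt improvement. The function names and the coordinates are illustrative assumptions, and the sketch uses only the standard library.

```python
import math

def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def route_km(stops):
    """Total length of an open route that visits the stops in the given order."""
    return sum(haversine_km(a[1], b[1]) for a, b in zip(stops, stops[1:]))

def order_stops(stops):
    """Reorder stops (first stop stays first) to shorten the overall route."""
    if len(stops) < 3:
        return list(stops)
    # Pass 1: greedy nearest-neighbor ordering from the starting stop.
    route, remaining = [stops[0]], list(stops[1:])
    while remaining:
        nearest = min(remaining, key=lambda s: haversine_km(route[-1][1], s[1]))
        remaining.remove(nearest)
        route.append(nearest)
    # Pass 2: 2-opt, reversing segments for as long as that shortens the route.
    improved = True
    while improved:
        improved = False
        for i in range(1, len(route) - 1):
            for j in range(i + 1, len(route)):
                candidate = route[:i] + route[i:j + 1][::-1] + route[j + 1:]
                if route_km(candidate) < route_km(route) - 1e-9:
                    route, improved = candidate, True
    return route

# Hypothetical draft order as a language model might return it.
# Coordinates are approximate and for illustration only.
draft = [
    ("British Museum", (51.5194, -0.1270)),
    ("Victoria and Albert Museum", (51.4966, -0.1722)),
    ("National Gallery", (51.5089, -0.1283)),
    ("Buckingham Palace", (51.5014, -0.1419)),
]
ordered = order_stops(draft)
print([name for name, _ in ordered])
print(f"{route_km(draft):.1f} km -> {route_km(ordered):.1f} km")
```

Straight-line distance is only a rough proxy for travel time. A production module would more likely work from routing data, opening hours, and the split of stops across days.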

Challenges

The first challenge was ChatGPT’s response speed. Many people expect ChatGPT to respond to every user query instantly and without errors. In practice, it doesn’t work that way, since the ChatGPT model is quite large and demand for the OpenAI API grows every day. As a result, even a basic request made through our interface in TravelPlanBooker, such as a simple trip with one or two locations, takes five to ten seconds to fulfill. A longer and more complex trip can take up to 30 seconds. This led to many discussions with our client, who expected a quicker response time. The latency of LLM-based chatbots is a serious challenge that we and many other AI teams have to tackle.

To mitigate this problem, we optimized our workflow and parallelized calls to various APIs as much as possible. Also, we introduced a caching layer for various API responses that can be reused.

Such measures speed up the platform’s response. However, the main bottleneck remains: the response speed of the ChatGPT API itself.
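The two measures described above, parallel API calls and a cache for reusable responses, can be sketched roughly as follows. This is an illustration under stated assumptions rather than our production code: `fetch_flights`, `fetch_hotels`, and `fetch_cars` are hypothetical stand-ins for calls to the client's APIs (simulated here with a short sleep), and `TTLCache` is a deliberately simple in-memory cache with a time-to-live.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from functools import partial

class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds):
        self.ttl = ttl_seconds
        self._store = {}

    def get_or_call(self, key, fn):
        hit = self._store.get(key)
        if hit is not None and time.monotonic() - hit[0] < self.ttl:
            return hit[1]  # fresh cached value, skip the slow call
        value = fn()
        self._store[key] = (time.monotonic(), value)
        return value

CALLS = {"flights": 0, "hotels": 0, "cars": 0}  # counts simulated API hits

def fetch_flights(destination):  # hypothetical stand-in for a client API call
    CALLS["flights"] += 1
    time.sleep(0.2)
    return [f"flight to {destination}"]

def fetch_hotels(destination):  # hypothetical stand-in for a client API call
    CALLS["hotels"] += 1
    time.sleep(0.2)
    return [f"hotel in {destination}"]

def fetch_cars(destination):  # hypothetical stand-in for a client API call
    CALLS["cars"] += 1
    time.sleep(0.2)
    return [f"car rental in {destination}"]

def gather_offers(destination, cache, pool):
    """Run the independent lookups in parallel, reusing cached responses."""
    sources = {"flights": fetch_flights, "hotels": fetch_hotels, "cars": fetch_cars}
    futures = {
        name: pool.submit(cache.get_or_call, (name, destination),
                          partial(fn, destination))
        for name, fn in sources.items()
    }
    return {name: future.result() for name, future in futures.items()}

cache = TTLCache(ttl_seconds=300)
with ThreadPoolExecutor(max_workers=3) as pool:
    start = time.perf_counter()
    first = gather_offers("London", cache, pool)
    cold = time.perf_counter() - start

    start = time.perf_counter()
    second = gather_offers("London", cache, pool)
    warm = time.perf_counter() - start

print(f"cold: {cold:.2f}s, warm: {warm:.2f}s")
```

In this sketch the three lookups overlap instead of running one after another, and a repeated request for the same destination is served from the cache. Two concurrent misses for the same key may both call the underlying function, which is acceptable for read-only lookups. The language model call itself is not cached here, which matches the observation that it remains the main bottleneck.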

The second challenge was the consistency of ChatGPT’s response quality. In every LLM-based project, the risk of model hallucinations has to be taken into account. Let’s say that in one case out of 100, the model comes up with a non-existent city or location. We eliminated this problem by verifying the locations and attractions produced by ChatGPT. To do this, we send each selected location to MapBox (an API similar to Google Maps for verifying objects, names, and locations) to validate it and build a route. We also check that the location is available in the client’s database through the client’s API. Location checks do not cover every kind of inconsistency, however. There is still no 100% guarantee that the model will not, for example, respond to the user in French just because they want to go to France, even though the request was written in English. We take certain measures to minimize such issues, but there is still a slight chance that they occur.
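The verification step described above can be sketched as a filter that keeps a suggested location only when it passes both checks. This is a simplified illustration, not our actual integration: `geocode` stands in for a geocoding request to MapBox and `in_catalog` for a lookup in the client's API. Both are hypothetical callables, backed here by small in-memory stubs, and the third candidate is an invented name used to simulate a hallucinated place.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass(frozen=True)
class Place:
    name: str
    lat: float
    lon: float

def verify_locations(
    candidates: List[str],
    geocode: Callable[[str], Optional[Place]],
    in_catalog: Callable[[str], bool],
) -> Tuple[List[Place], List[str]]:
    """Split model-suggested names into verified places and rejected names."""
    verified, rejected = [], []
    for name in candidates:
        place = geocode(name)  # None means the geocoder found no such place
        if place is not None and in_catalog(place.name):
            verified.append(place)
        else:
            rejected.append(name)
    return verified, rejected

# In-memory stubs standing in for the real services (illustration only,
# with approximate coordinates).
KNOWN_PLACES = {
    "British Museum": Place("British Museum", 51.5194, -0.1270),
    "National Gallery": Place("National Gallery", 51.5089, -0.1283),
}
CATALOG = {"British Museum", "National Gallery"}

def stub_geocode(name: str) -> Optional[Place]:
    return KNOWN_PLACES.get(name)

def stub_in_catalog(name: str) -> bool:
    return name in CATALOG

suggested = ["British Museum", "National Gallery", "Museum of Floating Clocks"]
verified, rejected = verify_locations(suggested, stub_geocode, stub_in_catalog)
print([p.name for p in verified])
print(rejected)
```

Passing the two checks in as callables keeps the filter independent of any particular provider, so the same logic can be tested with stubs and wired to real services later. Rejected names could be dropped from the plan or sent back to the model with a request for alternatives.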

This was a challenging but rewarding LLM project for us, as now we better understand the challenges that arise in such projects and can find ways to overcome them while meeting the client’s expectations.

Results

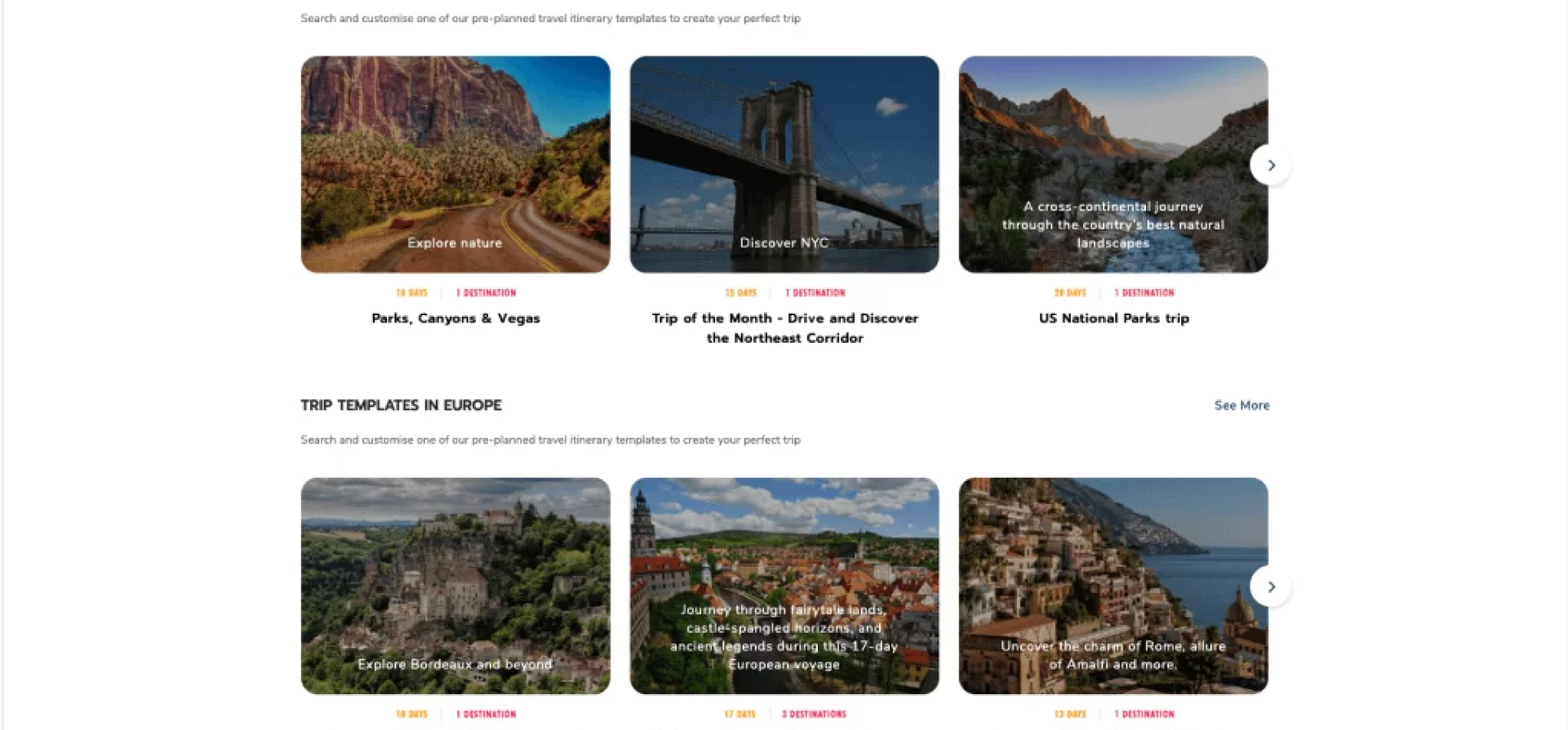

With just one query, users can effortlessly create personalized itineraries. The AI chatbot recommends verified destinations, optimizes routes, and schedules attractions for each day. Features include travel planning, route optimization, and ticket and accommodation booking. Users describe their trips and receive draft plans with location recommendations and activity options. The interactive interface allows users to make adjustments in real time. Our team developed this feature from scratch, meeting the client’s vision. TravelPlanBooker’s AI assistant and planning tool elevate the travel experience, offering tailored plans based on preferences.

Project overview

- Features: Travel planning, route optimization, ticket booking, accommodation booking

- Technologies: Python, MapBox, Google Colaboratory, SciPy, OpenAI API, Angular, LangChain

- Team: Founder, Product Owner, Solution Architect, Backend Engineer, Frontend Engineer

- Services: Solution Design, Discovery Phase, Frontend Development, Backend Development, LLM-Based Chatbot Development, Maintenance and Support

- Duration: 2 months
